The rapid growth of decentralised finance (DeFi) applications and, more recently, non-fungible tokens (NFTs) has brought the Ethereum network to its knees several times. A technique known as sharding is expected to help Ethereum reach new heights in terms of throughput and transaction speed.

Ethereum’s modular approach to blockchain design allows each component to be optimised separately and eliminates some of the inefficiencies seen in monolithic blockchains. Layer 2s and sharding are each expected to increase throughput by roughly 100x, and consumer bandwidth is expected to grow by about 50% per year for at least the next decade. Will Ethereum reach 1 million transactions per second (TPS) this way?

The burden of the blockchain trilemma

Blockchains are powerful networks that provide users with a shared digital ledger. However, the architecture behind these ledgers leads to a trade-off between decentralisation, security and scalability. This issue, where a blockchain must choose two of these three guarantees, is known as the “blockchain trilemma”. Traditionally, most blockchains, like Bitcoin (BTC) and Ethereum (ETH), have opted for decentralisation and security at the expense of scalability. In this report, we outline the steps Ethereum is taking to scale its blockchain without compromising on the other two attributes.

This trilemma has burdened the industry for years, with many alternative layer 1s opting to sacrifice decentralisation in favour of speed. While this trade-off has made for a better user experience, centralised blockchains do not allow for the innovation, culture or benefits associated with a decentralised paradigm. There have been many attempts to solve this issue, including Plasma and sidechains, but these solutions also fell short of their design goals.

Task distribution as a prerequisite for scaling

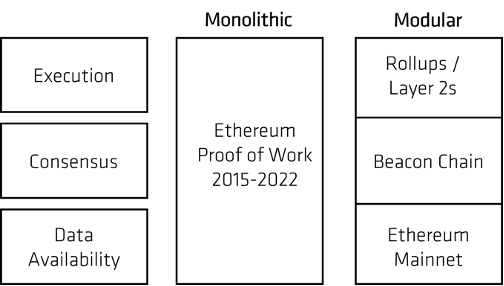

From a high-level perspective, blockchains perform three main tasks: execution, consensus and data availability. Execution is where transactions are processed, consensus is where network participants agree on what has happened, and data availability is the guarantee that transaction data is accessible to all participants. Since their inception, blockchains have been monolithic; that is, a single chain was expected to perform all three tasks. Over a decade later, modular blockchain architectures are on the rise. A modular blockchain applies the concept of specialisation and separates these tasks across different chains.

When Ethereum upgrades to Proof of Stake (PoS), expected in September 2022, different chains will handle each task. Consensus will be handled by the PoS Beacon Chain, data availability by the current Ethereum chain, and execution by layer 2s. Layer 2s are separate blockchains built on top of Ethereum (layer 1) that help to scale the layer 1 with higher throughput and lower fees, all while preserving its decentralisation and security.
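
As a minimal illustration of the modular split described above, the following Python sketch maps each of the three tasks to the layer expected to handle it after the Merge and contrasts this with a monolithic design. The `Task` enum, the layer labels and the `layers_used` helper are our own illustrative names, not part of any Ethereum client API.

```python
from enum import Enum


class Task(Enum):
    """The three core tasks a blockchain performs."""
    EXECUTION = "execution"
    CONSENSUS = "consensus"
    DATA_AVAILABILITY = "data availability"


# Monolithic design: a single chain is responsible for every task.
MONOLITHIC = {task: "single chain" for task in Task}

# Modular design as described for post-Merge Ethereum (labels are illustrative).
MODULAR_ETHEREUM = {
    Task.CONSENSUS: "Beacon Chain (Proof of Stake)",
    Task.DATA_AVAILABILITY: "Ethereum (layer 1)",
    Task.EXECUTION: "layer 2 rollups",
}


def layers_used(design: dict[Task, str]) -> set[str]:
    """Return the distinct chains/layers a design relies on."""
    return set(design.values())


print(len(layers_used(MONOLITHIC)))        # 1
print(len(layers_used(MODULAR_ETHEREUM)))  # 3
```

Each layer in the modular mapping can then be optimised for its single task, which is the specialisation argument made above.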

Although many layer 2s have already launched or are in production, the scaling roadmap is far from complete. Ethereum currently handles around 10 TPS, so reaching 1 million TPS would require an improvement of roughly 100,000x. For reference, a network like Visa can handle around 65,000 TPS. To scale to 1 million TPS, Ethereum will need three further developments: improved rollups, sharding and increased bandwidth. We discuss these technologies in greater detail below.
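
To make the arithmetic behind the 1 million TPS target explicit, the short Python sketch below multiplies the estimated gains quoted in this report: 10x each for off-chain execution, data compression, proto-danksharding and full danksharding, plus 10x from roughly six years of bandwidth growth. These multipliers are the report’s projections rather than measurements, and treating them as independent and multiplicative is itself a simplifying assumption.

```python
import math

CURRENT_TPS = 10          # approximate current figure quoted in this report
TARGET_TPS = 1_000_000

# Projected multipliers as stated in this report (estimates, not measurements).
GAINS = {
    "rollups: off-chain execution": 10,
    "rollups: data compression": 10,
    "proto-danksharding (blobs)": 10,
    "full danksharding (sampling)": 10,
    "bandwidth growth (~6 years)": 10,
}

required = TARGET_TPS / CURRENT_TPS   # improvement factor needed
combined = math.prod(GAINS.values())  # assumes the gains simply multiply

print(f"required improvement: {required:,.0f}x")
print(f"combined gains:       {combined:,}x")
print(f"projected throughput: {CURRENT_TPS * combined:,} TPS")
```

Under these assumptions, rollups (100x) and sharding (100x) together contribute 10,000x, and bandwidth growth supplies the remaining 10x.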

Rollups as layer 2 solutions for Ethereum

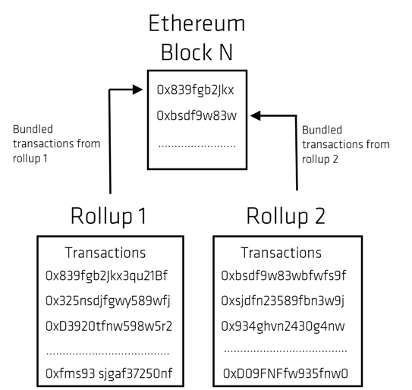

As mentioned above, Ethereum intends to scale using rollups/layer 2s. These are separate blockchains that absorb the burden of executing transactions so that Ethereum can focus on consensus and data availability. Many transactions are executed on layer 2 and then rolled up into a single transaction, which is subsequently posted to the Ethereum blockchain for verification. Since ninety-nine percent of gas fees are related to execution, moving execution off-chain saves more than just time. The figure above illustrates a simplified relationship between the transactions of different rollups and an Ethereum block.
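
The following Python sketch shows, in a deliberately simplified form, what “rolling up” means: a batch of transfers is executed off-chain, and only one summary (the transaction data plus commitments to the state before and after) is produced for posting to layer 1. The `Transfer` type, the `execute_batch` function and the SHA-256-over-JSON “state root” are hypothetical stand-ins; production rollups use Merkle-style state trees, Keccak-256 and either fraud proofs or validity proofs.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: str
    recipient: str
    amount: int


def state_root(balances: dict[str, int]) -> str:
    # Simplified commitment: SHA-256 over the sorted balances. Real rollups use
    # Merkle-style trees and Keccak-256; this stand-in is an assumption.
    encoded = json.dumps(balances, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def execute_batch(balances: dict[str, int],
                  txs: list[Transfer]) -> tuple[dict, dict[str, int]]:
    """Execute transfers off-chain and build ONE summary for layer 1."""
    state = dict(balances)          # never mutate the caller's state
    applied = []
    for tx in txs:
        if tx.amount <= 0 or state.get(tx.sender, 0) < tx.amount:
            continue                # toy model: silently drop invalid transfers
        state[tx.sender] -= tx.amount
        state[tx.recipient] = state.get(tx.recipient, 0) + tx.amount
        applied.append((tx.sender, tx.recipient, tx.amount))
    batch = {
        "pre_state_root": state_root(balances),
        "post_state_root": state_root(state),
        "tx_data": applied,         # posted so anyone can re-check the batch
        "tx_count": len(applied),
    }
    return batch, state


genesis = {"alice": 100, "bob": 50}
txs = [Transfer("alice", "bob", 30), Transfer("bob", "carol", 20),
       Transfer("carol", "alice", 1_000)]   # the last one is invalid
batch, new_state = execute_batch(genesis, txs)
print(batch["tx_count"])  # 2
print(new_state)          # {'alice': 70, 'bob': 60, 'carol': 20}
```

However many transfers the batch contains, layer 1 receives a single submission; the cost of that submission is what the next paragraphs examine.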

Checking the validity of one bundled transaction can be an order of magnitude faster than executing each transaction individually. Bundling many transactions into one allows for more TPS, reduces network congestion and lowers gas fees for users. Moving computation off-chain to a layer 2 provides greater scalability and leaves more room for optimising throughput and latency. Off-chain execution alone could scale the Ethereum network by a factor of 10.
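
To see why bundling lowers fees, consider how a fixed per-transaction cost is shared across a batch. The sketch below uses two real Ethereum constants: the 21,000 gas base cost of every transaction and the calldata price of 16 gas per non-zero byte (EIP-2028). The 100-byte transaction size and the optional `batch_overhead_gas` are hypothetical parameters, and the model counts only layer 1 data costs, not layer 2 execution or proof verification.

```python
BASE_TX_GAS = 21_000          # fixed gas cost of any Ethereum transaction
GAS_PER_NONZERO_BYTE = 16     # calldata price per non-zero byte (EIP-2028)


def gas_per_rollup_tx(batch_size: int, bytes_per_tx: int,
                      batch_overhead_gas: int = 0) -> float:
    """Layer 1 gas attributable to each transaction in a rollup batch.

    Worst case: every calldata byte is priced as non-zero.
    `batch_overhead_gas` is a hypothetical stand-in for proof/state-root costs.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    fixed = BASE_TX_GAS + batch_overhead_gas           # paid once per batch
    data = batch_size * bytes_per_tx * GAS_PER_NONZERO_BYTE
    return (fixed + data) / batch_size


for n in (1, 10, 100, 1000):
    print(n, round(gas_per_rollup_tx(n, bytes_per_tx=100)))
```

With these assumptions, the per-transaction layer 1 cost falls from 22,600 gas for a batch of one to about 1,620 gas for a batch of 1,000. Once the fixed cost is amortised, the data cost dominates, which is why data compression matters.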

Furthermore, once rollups become decentralised, posting the transaction data back on-chain for verification preserves the security and decentralisation of the Ethereum network, thereby addressing the blockchain trilemma. Further optimisations are possible with improved data compression techniques. Data compression reduces the number of bytes needed to represent the same underlying information. Posting compressed data to Ethereum further reduces costs and could improve scalability by another 10x.
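
One compression technique rollups can use is to replace 20-byte Ethereum addresses with short indices into an on-chain registry of previously seen addresses, and to encode amounts in only as many bytes as they need. The Python sketch below compares a naive 72-byte encoding of a transfer with such a compact encoding; the 4-byte index width, the `AddressRegistry` class and the length-prefixed amount format are illustrative assumptions, not a specific rollup’s wire format.

```python
ADDRESS_BYTES = 20   # size of an Ethereum address
INDEX_BYTES = 4      # hypothetical: width of an index into an address registry


class AddressRegistry:
    """Assigns each address a small integer index (illustrative only)."""

    def __init__(self) -> None:
        self._index: dict[bytes, int] = {}

    def index_of(self, address: bytes) -> int:
        if address not in self._index:
            self._index[address] = len(self._index)
        return self._index[address]


def encode_naive(sender: bytes, recipient: bytes, amount: int) -> bytes:
    # Uncompressed baseline: two full addresses plus a 32-byte amount.
    return sender + recipient + amount.to_bytes(32, "big")


def encode_compressed(reg: AddressRegistry, sender: bytes, recipient: bytes,
                      amount: int) -> bytes:
    # 4-byte indices instead of addresses, and a length-prefixed amount.
    amount_bytes = amount.to_bytes(max(1, (amount.bit_length() + 7) // 8), "big")
    return (reg.index_of(sender).to_bytes(INDEX_BYTES, "big")
            + reg.index_of(recipient).to_bytes(INDEX_BYTES, "big")
            + len(amount_bytes).to_bytes(1, "big")
            + amount_bytes)


alice, bob = bytes.fromhex("11" * ADDRESS_BYTES), bytes.fromhex("22" * ADDRESS_BYTES)
registry = AddressRegistry()
naive = encode_naive(alice, bob, 10**18)              # 1 ETH in wei
compact = encode_compressed(registry, alice, bob, 10**18)
print(len(naive), len(compact))  # 72 17
```

Here a 1 ETH transfer shrinks from 72 to 17 bytes, roughly a 4x reduction for this one field layout; real rollups combine several such techniques, so actual ratios vary.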

Data sharding and its opportunities

As mentioned above, layer 2s will handle execution, while Ethereum will focus on consensus and data availability, the latter being a critical component of both security and scalability. Sharding is a network architecture designed to scale data availability. Currently, every consensus participant must download all of the data in a block and independently verify it before signing off on that block.

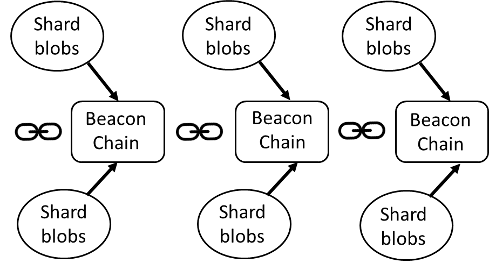

This is an inefficient process and a considerable bottleneck for the network. Yet the check cannot simply be skipped: if any of this data is missing, the network cannot verify whether a block is valid. Data availability is therefore the guarantee that the block proposer has published all the necessary transaction data, and that this data is available to other network participants. Sharding allows network participants to download only a sample of the data while still guaranteeing data availability. Given the complexity of this design, sharding will be rolled out in two phases: proto-danksharding and full danksharding.
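
Why can a small sample stand in for the whole block? In a simplified one-dimensional model, we assume the block data is extended with a rate-1/2 erasure code, so the original can be reconstructed from any half of the chunks. An adversary who wants to make the data unrecoverable must therefore withhold roughly half of the chunks, and each uniformly random sample then has about a 50% chance of exposing the gap, so the chance that k independent samples all miss it is at most (1/2)^k. The Python sketch below computes this bound and checks it by simulation; danksharding’s actual two-dimensional scheme uses different parameters, and the function names are hypothetical.

```python
import random


def undetected_probability(samples: int, withheld_fraction: float = 0.5) -> float:
    """Chance that `samples` independent uniform samples all hit available chunks."""
    return (1 - withheld_fraction) ** samples


def simulate_undetected(samples: int, chunks: int = 256,
                        withheld_fraction: float = 0.5,
                        trials: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    # Which chunks are withheld does not matter for uniform sampling,
    # so the adversary is modelled as withholding the first n_withheld.
    n_withheld = int(chunks * withheld_fraction)
    fooled = 0
    for _ in range(trials):
        if all(rng.randrange(chunks) >= n_withheld for _ in range(samples)):
            fooled += 1                # every sample landed on available data
    return fooled / trials


for k in (1, 5, 10, 30):
    print(k, undetected_probability(k), simulate_undetected(k))
```

In this model, thirty samples already push the chance of being fooled below one in a billion, regardless of how large the block is.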

Proto-danksharding (also known as EIP-4844) is a proposal to implement most, but not all, of the logic that makes up the complete danksharding specification. With proto-danksharding, each network participant must still independently verify that the entire data set is available. The primary innovation of proto-danksharding is a new transaction type, referred to as a blob-carrying transaction. Posting data in blobs can be significantly cheaper for layer 2s than using the current transaction type, and could improve scalability by a factor of 10. Full danksharding then introduces data availability sampling, so that network participants only need to download a small fraction of the data to verify a block. Full danksharding could improve scalability by another 10x. The figure in this section illustrates the relation between blobs and the Beacon Chain.
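
To give a sense of scale, the sketch below converts blob space into an approximate transaction rate. The blob size of 4,096 field elements of 32 bytes (128 KiB) comes from EIP-4844 and the 12-second slot time from Proof of Stake Ethereum; the number of blobs per block and the bytes per compressed transaction are hypothetical inputs rather than protocol values.

```python
BLOB_BYTES = 4096 * 32   # EIP-4844 blob: 4,096 field elements x 32 bytes (raw size;
                         # slightly less is usable as payload in practice)
SLOT_SECONDS = 12        # one block per 12-second slot under Proof of Stake


def blob_tps(blobs_per_block: int, bytes_per_tx: int) -> float:
    """Rough rollup TPS if all blob space held compressed transactions."""
    bytes_per_second = blobs_per_block * BLOB_BYTES / SLOT_SECONDS
    return bytes_per_second / bytes_per_tx


# Hypothetical inputs: blob counts per block and bytes per compressed transaction.
for blobs in (1, 4, 16):
    print(blobs, round(blob_tps(blobs, bytes_per_tx=16)))
```

Because throughput scales linearly with the number of blobs per block, the jump from proto-danksharding to full danksharding is mainly about letting nodes handle far more blobs without each one downloading all of them.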

Further gains from bandwidth growth

A blockchain requires various computational resources, namely CPU cycles, storage, disk I/O and bandwidth. Ethereum intends to move to a stateless architecture, which greatly reduces the demands on the first three resources but still requires bandwidth. Bandwidth differs from the other three in that scaling it depends on external technological progress rather than on changes to Ethereum itself.

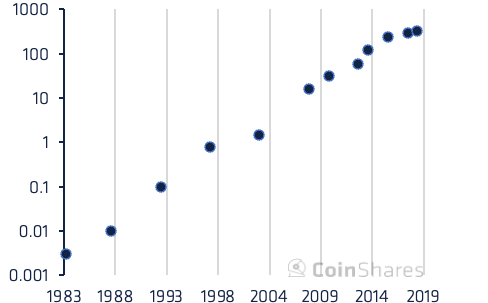

This is because consumer bandwidth tends to increase by roughly 50% every year (an observation known as Nielsen’s law, analogous to Moore’s law). At this compound rate, it would take approximately five to six years to realise a 10x increase in bandwidth, and roughly another five to six years to reach 100x. There is good reason to believe that bandwidth will continue to improve at its current pace, since bandwidth is inherently parallelisable. The figure above shows the growth of bandwidth from 1983 to 2019.
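
The five-to-six-year figure follows from compound growth: at 50% per year, bandwidth after n years is 1.5^n times today’s, so a 10x increase takes n = ln 10 / ln 1.5, or about 5.7 years. The short Python check below reproduces this; `years_to_multiply` is simply our own helper name.

```python
import math

GROWTH_PER_YEAR = 0.5   # ~50% annual growth in consumer bandwidth, as cited above


def years_to_multiply(factor: float, growth: float = GROWTH_PER_YEAR) -> float:
    """Years of compound growth needed to multiply bandwidth by `factor`."""
    return math.log(factor) / math.log(1 + growth)


print(f"{years_to_multiply(10):.1f} years for 10x")    # 5.7 years for 10x
print(f"{years_to_multiply(100):.1f} years for 100x")  # 11.4 years for 100x
```

Since 100x is 10x applied twice, the second 10x takes exactly as long as the first.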

Given the importance of scalability for the mass adoption of Ethereum, these technologies will become the main priorities once the Merge has successfully taken place (expected in September 2022). Since these technologies are only several years away, and considering the average yearly growth of bandwidth, we find it reasonable to expect 1 million TPS within the next six years.